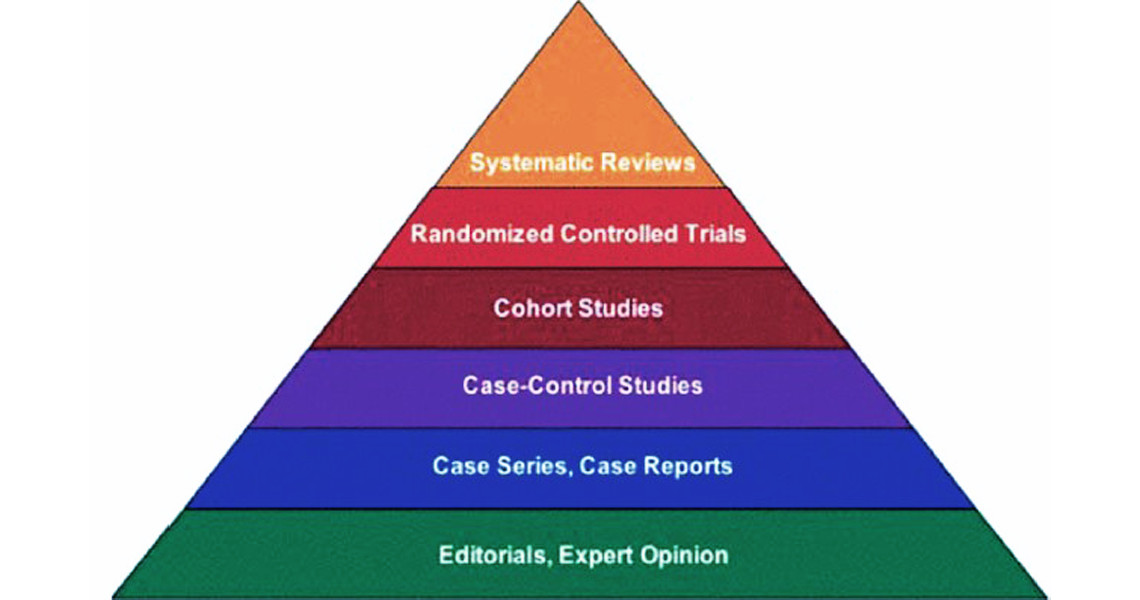

The hierarchy of evidence as described by the GRADE Working Group. Figure via Indian Journal of Urology.

What should we do to improve health in our city? How can we shift the health of whole populations so that everyone is healthier and inequalities in health by race, ethnicity or social class are reduced? This is the big question for public health, one we are often asked but, regrettably, often cannot answer well.

In the traditional medical approach, which focuses on improving the health of individual patients, the answers are (at least apparently) much simpler to obtain. But even in traditional medical care the focus on evidence-based practice is relatively recent, and many medical interventions and treatments continue to be based on historical practice, assumptions, and belief rather than on rigorous scientific evidence of what works. The evidence-based medicine movement, which began in the late 1980s, was viewed as revolutionary by many and strongly resisted by some. Nevertheless, it ushered in an era in which medical practice gradually came to rely more heavily on randomized clinical trials, and on meta-analyses of randomized clinical trials, to justify treatment decisions.

The randomized trial is often held up as the gold standard for causal inference. Various formulations of the "hierarchy of evidence" place randomized trials at or near the top, and observational studies, in which treatments or other exposures of interest are not randomized, toward the bottom. Observational studies are often somewhat disparagingly referred to as "correlational studies". This view discounts virtually all of the studies we have on the social determinants of health (as well as many studies of individual-level risk factors) as inconclusive and not robust enough to support any causal conclusion, or any intervention based on such a conclusion.

But even randomized trials have important limitations. Their results may not generalize beyond the trial setting, and effects may be heterogeneous across populations because they are modified by other factors. The growing emphasis on translational and implementation science is a recognition of the limitations inherent in assuming that the results of randomized trials apply to contexts different from the idealized experiment.

The bigger challenge, however, is that the types of causal questions and the kinds of interventions of greatest interest for improving public health are either impossible or very difficult to study via randomized trials. Clever uses of observational data can take advantage of "natural" experiments, in which allocation of the intervention or policy is close to random. Sophisticated analytical approaches such as instrumental variables can also be used to approximate, with observational data, the conclusions that could be drawn from randomized experiments. Simulation modeling can sometimes help us understand the implications of several pieces of evidence acting together, especially when feedbacks or contagion-like effects (be it contagion of germs or of behaviors) are involved.
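To make the instrumental-variables idea a little more concrete, here is a minimal sketch on simulated data, not an analysis of any real policy. All names and numbers are hypothetical assumptions: an unmeasured confounder `u` drives both an exposure `x` and a health outcome `y`, while a binary instrument `z` (think of a policy that reaches some areas "as if at random") shifts the exposure but affects the outcome only through it. Under those assumptions, the naive regression slope is biased by the confounder, while the Wald/IV ratio `cov(z, y) / cov(z, x)` recovers the true effect.

```python
import random


def simulate(n=50_000, true_effect=2.0, seed=1):
    """Hypothetical data: u confounds x and y; z is a valid instrument (assumed)."""
    rng = random.Random(seed)
    z, x, y = [], [], []
    for _ in range(n):
        u = rng.gauss(0, 1)          # unmeasured confounder
        zi = rng.randint(0, 1)       # "as-if random" instrument, e.g. policy exposure
        xi = 1.0 * zi + 1.0 * u + rng.gauss(0, 1)            # exposure
        yi = true_effect * xi + 3.0 * u + rng.gauss(0, 1)    # outcome
        z.append(zi)
        x.append(xi)
        y.append(yi)
    return z, x, y


def mean(v):
    return sum(v) / len(v)


def cov(a, b):
    """Sample covariance of two equal-length sequences."""
    ma, mb = mean(a), mean(b)
    return sum((ai - ma) * (bi - mb) for ai, bi in zip(a, b)) / (len(a) - 1)


def naive_slope(x, y):
    """Ordinary least-squares slope of y on x; ignores the confounder."""
    return cov(x, y) / cov(x, x)


def iv_wald(z, x, y):
    """IV estimate; with a binary z this equals the Wald ratio of mean differences."""
    return cov(z, y) / cov(z, x)


if __name__ == "__main__":
    z, x, y = simulate()
    print(f"naive estimate: {naive_slope(x, y):.2f}")  # biased upward, roughly 3.3
    print(f"IV estimate:    {iv_wald(z, x, y):.2f}")   # close to the true effect of 2.0
```

The IV estimate is only as good as its assumptions: if the instrument also affected the outcome through some other pathway, or were itself related to the confounder, the ratio would be biased too, which is why such analyses complement rather than replace other forms of evidence.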

Our job is much tougher than understanding whether a pill works. When we attempt to improve population health, we are intervening on a system, and in a complex system an intervention may have multiple effects. Some of these effects may be very long term, may reinforce or cancel each other out, or may interact in complex ways with other factors. These dynamics are very difficult to understand through randomized trials alone, even in situations where whole populations can be randomized.

Consider the soda tax being proposed in Philadelphia. A randomized trial, although conceivable, would be virtually impossible to carry out. And the impact of the tax is likely to be complex and to unfold over a very long period. Aside from the effects of the tax on actual soda consumption and its potential direct health consequences down the road (which may be very difficult to capture in short-term evaluations), the use of the tax revenue to support education could have important long-term indirect effects on a range of other health outcomes that we know are linked to early childhood conditions.

In public health we need to think about evidence in a much more integrated way. Randomized trials are important, but so is clever use of observational data. How could we study the impact of discrimination on health if not through observation? We did not need a randomized trial to conclude that smoking causes lung cancer, and the utility of banning smoking in public spaces was not established via a randomized trial.

Of course, in order to protect the health of the public we often need to make judgment calls and take action in the face of incomplete evidence. Subsequent evaluation, so that we can learn what works and what doesn't, is key. Evaluating action can also deepen our basic understanding of causes, which we can then use to propose new interventions. Action itself can be thought of as an experiment: through careful evaluation we can learn not only whether the intervention "worked" but also whether it had unanticipated effects. Rather than a hierarchy of evidence, public health relies on a circle of evidence in which multiple sources feed into and complement each other. The interrelatedness of research and action, and the need to integrate different types of evidence, are at the core of public health. This is what makes the field complex and challenging, but also so socially relevant.